This year, I had the luck to attend AWS re:Invent in Las Vegas, the “grand-messe” of Amazon’s Cloud.

Despite the very ambitious agenda that I planned, I was able to attend all my booked sessions and keynotes (at the cost of dozens of kilometers of walking, but I’ll come back on it later ^^), and I will try do give in this blog post most of the outcomes from those passionating discussions.

I will split this (long) post in 4 parts :

- General and main announcements from AWS re:Invent 2024

- Review and outcomes of the technical sessions I attended

- Advices for future re:Invent attendees

- Some pictures !

General and main announcements from AWS re:Invent 2024

Most of the main announcements from this year’s AWS re:Invent were directly or indirectly linked to AI.

The most important one being the availability of EC2 Trn2 instances, each embarking 16 Trainium 2 chips, with 192 vCPUs, 2 TiB of memory, 3,2 Tbps of network bandwidth through EFA (Elastic Fabric Adapter) v3.

–> Amazon EC2 Trn2 Instances and Trn2 UltraServers for AI/ML training and inference are now available

More linked to my predilection domains, below are the list of the most important announcements from this year re:Invent (knowing that some of those were announced a few weeks prior to the event) :

–> Use your on-premises infrastructure in Amazon EKS clusters with Amazon EKS Hybrid Nodes

–> Introducing queryable object metadata for Amazon S3 buckets (preview)

–> Introducing default data integrity protections for new objects in Amazon S3

Review and outcomes of the technical sessions I attended

Below is the list of all sessions I managed to attend during re:Invent 2024, and part of the notes I took during those.

I’ll put a link to the video recordings of each of those sessions (when available), if you want (and have time) to get more details !.

I’ll just put the notes here exactly as I took it on my paper tablet, so don’t expect to have the outcomes in the form of a clear and detailed paragraph (BTW, I recently got this one as a gift, and this is the best thing ever if you regularly take handwritten notes).

(Monday) NET402 : EC2 Nitro networking under the hood

This was the first session of the week, and probably the one I was the more excited to attend, as I am really passionated by this specific technology.

Nitro is at the foundations of AWS virtualization and networking. It marked a turning point for AWS, since it was the beginning of manufacturing their own silicons to fully meet their performance and growth needs.

Below are my notes from this session, as-is :

Reminders :

- Back to 2017, up to 25% of the compute capacity of EC2 hosts were consumed by networking / security / storage processes

- Nitro silicon started in 2013, with Anapurna Labs. Nitro cards offload most of the work to dedicated hardware, while keeping only a lightweight hypervisor in charge of resources management directly on the host

- All instances starting c4 benefits from Nitro system. Current generation of Nitro chips is v5, used by all c7 instances

- More than 750 instance types are virtualized using the Nitro system

- Networking : ENA are presented to the instances as PCIe devices by the Nitro cards, with bandwidth up to 200 Gbps (EFA goes up to Tbps)

Interesting outcomes :

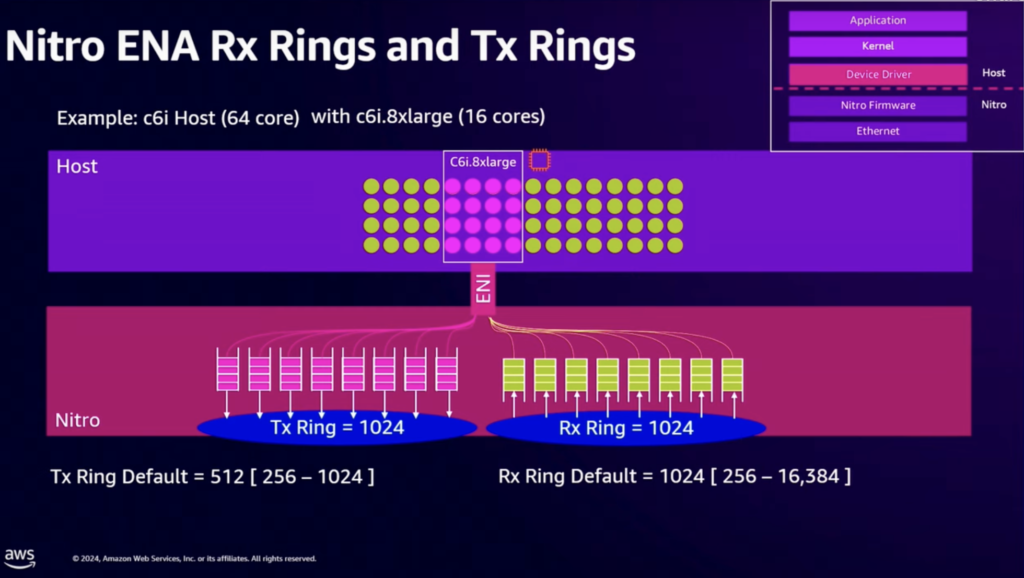

- Each ENI created by the ENA gets several Queue buffers (Tx/Rx), with a ring consuming those queues (for Rx, this is Receive Side Scaling, which can be disabled)

- 5 tuple of the host traffic (src IP / dest IP / protocol / source port / dest port) is used for queue and processor assignment at the Nitro card level

- Routing / NACL / SG checks are applied directly by the Nitro card on the host sending traffic

- The mapping service is used to find the underlaying IP address of the destination host (other Nitro host, or blackfoot edge device)

- An adjacency is kept in cache by the Nitro card for each destination resolved using the mapping service

- There's a conntrack (connection tracking) entry cached by the Nitro card for each and every flow

- Because of destination resolution and conntrack, first packet has more latency than following ones

Single flow specifications :

- 5 Gbps for nominal / external flow

- 10 Gbps for Cluster Placement Group for TCP, 8 Gbps for UDP

- 25 Gbps for ENA Express : uses Enhanced Congestion Control Algorithm, sending a single flow through multiple fabric nodes, and offloads the reassembly and ordering to the receiving Nitro card

Micro bursting :

- Packets arrival rate exceeds packets processing rates. Partial full queue : delay. Complete full queue : drops.

- Prevention : congestion control algorithms

- Traffic Control : permits to control the amount of packets than can be sent by the Kernel to the Tx queue

- ENA Express (launched in 2022) : 25 Gbps with built-in congestion control, available only local to an AZ

VPC Mutations :

- NACL are stateless

- Security Groups : Untracked connections --> flows open with 0.0.0.0/0 or ::/0 are untracked (except for ICMP, which is always tracked)

- Updating SG, moving from tracked to tracked logic is OK. Moving from untracked to tracked can break the connection

- When running a firewall on a Nitro instance, it's better to allow all flows (untracked SG statement) to improve performance (avoiding connection tracking by Nitro), also knowing that there's a maximum number of conntrack entries per instance

Large flows optimization :

- Using GRE or IPsec tunnels cause the queue / processor selection to happen on a 3-tuple (instead of 5-tuple)

- Better to use UDP tunneling protocol, with source port variation per flow (VxLAN, Geneve, GTP, MPLSoUDP)

- Being able to horizontally scale at Nitro level (by assigning flows to different queues) also impact instance performance, as queues are mapped with the different instance vCPUs

Burst allowance :

- General purpose instances accumulate credits when being below their nominal bandwidth, which allows bursts, by consuming those accumulated credits

- Nitro cycles are shared with the hosted instances with the same ratio then the number of vCPU allowed to the instance on the host (%age)

- An instance having 25% of vCPU allocated on the host will get 25% nominal Nitro resources

- A neighbor instance taking advantage of burst allowance can sporadically consume more than the allowed Nitro resources, while Nitro will make sure all instances has the necessary bandwidth

Packets per second :

- This is a global metric global to the instance (for all queues)

- Even having a spread of traffic among multiple queues will not permit to overcome this limitation

- The enhanced ENA metric "pps_allowance_exceeded" exposed by the driver through ethtools permits to check if this restriction is hit

Performance tuning :

- Use placement groups to make instances "closer to each other" on a placement group in a single AZ

- Drivers : DPDK (DataPlane Development Kit) permits to treat packets in the user space, to limit context switching by having it treated by the kernel

- Use UDP among TCP

- Latency : poll-mode operation makes the kernel polling the Nitro queues to treat packets instead of using interrupts. Different levels, from C0 to C6, with C0 consuming more power, but offering the minimum latency

- [Dynamic] Interrupts moderation : driver parameter which permits to add some latency to the Nitro interrupts sent to the system when receiving packets

- RSS : Receive Side Scaling can be "tuned" starting Nitro v3 (instances version C5n) to map specific flows to specific queues

- ENA Flow Steering / N-Tuple filtering (starting Nitro v5 / instances 8th generation) : map specific flows to queues with ethtools

- ConnTrack timers can be configured

Performance and scaling metrics :

- ethtools displays conntrack_allowance_available and conntrack_allowance_exceeded information (can be exported with the CloudWatch agent to have it on the CloudWatch monitored metrics with alarms)

Action plan for optimizing Nitro throughput :

1) Know your traffic types (whale flows, TCP/UDP flows enthropy, single VS many flows)

2) Know the traffic type's profile (PPS, BPS, CPS)

3) Choose your instance type (Baseline and burst specs, Nitro perf capabilities, density of ENIs)

4) Tune and monitor your instances (driver, ethtools, VPC metrics)

(Monday) NET403 : Planet-scale networking : How AWS powers the world’s largest networks

Main principle is OWNERSHIP. Custom hardware, control-plane, transceivers, fibers.

"Be so performant and reliable that we are out of the way of your workloads"

Design goals are to be FLEXIBLE, to adapt to future needs (ie : AI, with introduction of UltraClusters needing Petabits/s throughput with super low latency)

Each team lives at a layer of "The Stack", but is free to talk to upper layers to understand what are their future needs and expectations.

Physical security : at the basis of everything. AWS makes sure to provide the right tools to ground engineers to make sure they can do their job without causing any issue.

Second design goal is CONSISTENCY.

Third design goal is RESILIENCY (plan for failure, create multiple options, choose the best place)

Network Design Principles :

Automate as much as possible (configuration, telemetry, traffic engineering) AWS Network = 850 raw events per second / 2.4 human engagements per hour

Should fail seldomly and in a predictable way (blast radius contention)

Avoid surprises : don't reach unprecedented scale (+ light on Beluga)

Network Designs = Distributed systems design (a router is only a computer with a fancy network card)

Inbound traffic engineering made 7x faster mitigations for inbound traffic congestion (usually more than 1h now to less than 10 minutes)

(Monday) NET401 : Optimizing ELB traffic distribution for high availability

Client Connectivity to load balancers :

Route 53 (always on for ELB)

CloudFront (distribute cacheable assets / offload direct to S3 / Lambda / WAF at the edge)

Global Accelerator (Reduce Latency, 2 global static IPs, TCP/UDP/HTTP) --> enter the AWS backbone as close as possible from the user

Global Accelerator uses the health-checks results from ELB or Route53 to send traffic to target groups

If no target groups are healthy --> traffic is sent to all target groups

NLB IPs are allocated at location (1 per AZ), and do not change during the lifetime of the NLB

ALB can have multiple IPs per AZ (scale up horizontally), and they can change

ELB can rely on Anomaly Detection to replace a node which seems to have wrong behavior (after gracefully removing it from the targets groups)

LB instance selection is only performed by the client, based on DNS resolution and result list choice

Client DNS best practices :

- refresh DNS when connection breaks

- use exponential backoff and jitter

- respect TTL values

- try other IPs

Cross-zone can temper issues when having uneven selection of ELB IPs, by sending traffic to targets in all AZs having targets

Target selection :

- on ALB, rules are evaluated from top to bottom

- most used rules might be placed higher in the rules list for better performance

default rule should redirect / reject unexpected requests (public ELB can receive a lot)

- stickiness for HTTP : adds an encrypted cookie

- LB algorithms : round-robin / LOR (Least outstanding request) / WR (weighted random), eventually with "anomaly detection" to adjust the weight of each target based on HTTP response codes and TCP errors, with RD/SI (Rapid Decrease / Slow Increase)

On NLB, target selection is performed by using a 6-tuple hash for TCP (src-ip/dest-ip/protocol/src-port/dest-port/seq number)

Traffic can go through a NLB while preserving the client source-IP thanks to Hyperplane / Nitro connection tracking

For UDP, initial sequence number is not used in the tuple hash calculation to select the target. It is then important to make sure that clients sending UDP traffic to the NLB are configured to diversify their source-port to be able to load-balance the flow to multiple targets

On GWLB, use of 5-tuple for TCP flows, 3-tuple for non-TCP flows

ELB Capacity Unit Reservation :

- by default, ELB scales to virtually any workload. Scale-up at 35% CPU, scale-down at 15% CPU with 12h cooldown.

- ALB scales on multiple dimensions (instance number and size)

- NLB and GWLB scale independently per AZ

- ELB CU reservation increases the minimum capacity of the ELB, starts provisioning it immediately, does not limit further scaling. Available for NLB and ALB

When to use it ?

- traffic shift / migration

- planned events

- monday's

- intermittent spiky traffic

PeakLCUs CloudWatch metric can be used to check the number of provisioned ELB instances

Interesting links :

– NEW : Introducing NLB TCP configurable idle timeout

– NEW : Load Balancer Capacity Unit Reservation for Application and Network Load Balancers

(Monday) CMP301 : Dive deep into the AWS Nitro system

Nitro silicon started in 2013.

AWS started build their own chips for : specialization / speed / innovation / security (Now done for Nitro, Graviton, Trainium)

5 generations of custom chips

Nitro hypervisor built for AWS. Each network card has its dedicated function (controller, networking, storage)

Nitro networking cards performs :

- VPC data-plane offload (ENI attach, SG, flow logs, routing, DHCP, DNS...)

- VPC encryption (AES256 end-to-end)

- ENA (Elastic Network Adapter)

- EFA (Elastic Fabric Adapter)

SRD is available starting Nitro v3 (instances c5n) --> Scalable Reliable Datagram. Makes use of multiple paths of the underlying network for a single flow

ENA Express : BW increase x5 (5Gbps to 25Gbps) + 85% latency reduction

The hypervisor software is very lightweight (compared to 25% host compute resources which were needed prior to Nitro offloading)

It is now only in charge of basic instances management and memory / CPU allocation, which permits to reach bare-metal like performance.

Security :

- encryption : all flows between the Nitro components + control plane are encrypted

- secure boot is ensured by the Nitro controller

- patching : all components can be upgraded with zero downtime

- no remote access (SSH, etc)

- only encrypted API integration for control-plane Interesting links :

– The Security Design of the AWS Nitro System

(Monday) NET301 : Advanced VPC Designs and what’s new

Status of AWS backbone :

34 regions

41 local zones

141 DX POPs

> 6 000 000 miles of fiber Interesting links :

– NEW : Amazon Virtual Private Cloud launches new security group sharing features

– NEW : Enhancing VPC Security with Amazon VPC Block Public Access

– NEW : Introducing security group referencing for AWS Transit Gateway

– NEW : AWS Direct Connect gateway and AWS Cloud WAN core network associations

– NEW : AWS announces UDP support for AWS PrivateLink and dual-stack Network Load Balancers

– NEW : AWS PrivateLink now supports cross-region connectivity

(Monday) KEY001 : Monday Night Live with Peter DeSantis

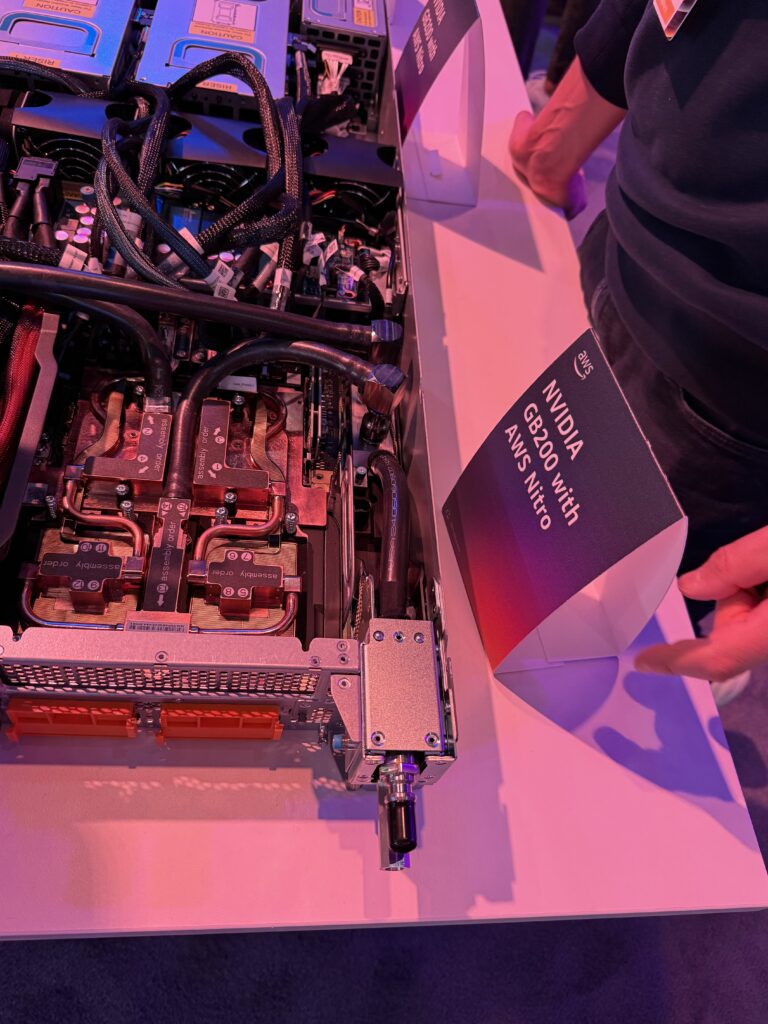

Tons of announcements, mainly related to AI (Trainium 2, 10p10u, UltraServers 2, project Rainer)

Interesting fact : they developed their own routing protocol (SIDR) to answer their specific needs of convergence speed.

(Tuesday) API304 : Building rate-limited solutions on AWS

(Video is not available)

Throttling is not rate-limiting :

- throttling more relates to admission control for the called service (ie : returning HTTP 429 and letting the caller decide what to do next)

- rate-limiting is more into making sure that downstream services will not be overloaded by the called service

API Gateway permits fine-grained throttling (asked engineer if there could be a way to throttle per source IP / per path, and not only per source IP)

Max concurrency (introduced in 2023) permits to limit the number of Lambdas instantiated by the SQS consumer, avoiding reaching the Reserved Concurrency for a given lambda (which would cause the SQS message to go back to the queue continuously).

Minimum value for Max concurrency is 2.

Workaround to have a Max concurrency of 1 : use a FIFO queue with the same batch-id on all messages sent to the queue.

KDS (Kinesis Data Streams) (works with shards of streams, not “messages”).

Shards can limit using throughput values (bytes per second).

By default, 1 lambda per shard. Parallelization Factor can be used to have up to 10 Lambdas per shard.

Lambda is made for data transformation, not for data transportation. (Tuesday) DAT406 : Dive deep into Amazon DynamoDB

DynamoDB offers predictable, low-latency at any scale.

It uses horizontal partitioning based on primary key :

- scale horizontally, by splitting data based on hash chunks (data replicated on 3 AZs with the same distribution)

- 1 leader replica is elected for each partition, being the one always having the most recent data (only leader can be used for writes)

Data is automatically re-splitted as soon as the partitions size goes above 10GB (in average, also depends of other factors).

This size has been chosen as it is the good one to perform recovery operations or re-splitting on existing partitions with in a efficient way.

Limits have been set to 1 000 writes per second / 3 000 reads per second, per partition. If IOPS goes above it, AWS can automatically create new partitions to handle it.

Every data on DynamoDB is encrypted (either with customer-provided key, either with AWS generated key).

Request routers are stateless.

Traffic today : 100s of tables over 200TB each, 100s of tables over 500 000 RPS each. +1M customers.

Prime Day 2024 (alone) : 146M RPS (at peak), 10s of trillions of requests

Design goal today : locate partition and replicas in 10s of µ seconds.

Finding it that quickly necessitates a smart caching / polling design.

They created MemDS (which is a in-memory cached data structure).

- each request router embarks a cache with the latest storage node info for a given DB partition

- each time a request router cache is hitted, it then requests 2 MemDS nodes to update its cache

- when a storage node triggers a split, it creates a new versioned info in MemDS

- creation of a new table triggers the control plane to explicitly publish it (not waiting for partition publisher polling)

Key takeaways :

- Use / build caches knowing they are eventually-consistent, and embrace it

- Long-lived connections benefits from request routers caches and avoids repeated TLS handshakes

- DynamoDB-shell provides a simple CLI access to DynamoDB modeled on mySQL CLI

Interesting links :

– NEW : Amazon DynamoDB global tables previews multi-Region strong consistency

– GitHub repo : DynamoDB-shell

(Tuesday) STG210 – AWS storage best practices for cost optimization

(Tuesday) CMP411 – Everything you’ve wanted to know about performance on EC2 Instances

(Video is not available)

Reminders of instance types :

- General purpose : T, M, Mac

- Compute optimized : C (dedicated CPU)

- Memory optimized : R, U, X, Z

- Accelerated computing : F, G, P, DL, VT, Trn, Inf

- Storage optimized : D, H, I

- HPC Optimized : Hpc

Flex instances offers 20% price decrease among “standard” equivalents.

- 95% of the time, instances can get up to the full CPU performance

- 5% of the time, instances may need to wait a few milliseconds to get the desired CPU

AWS does NOT plan to move to 100% Graviton CPU (current Graviton is 4th generation).

Current processor trends :

Core count increase

$ / vCPU increase, $ / perf decrease, perf / watt increase

Nitro : Metal instances use nitro cards too (ie : Mac), but also giving the ability to deploy a custom hypervisor on it.

Host servers at AWS are called “droplets” (we are in the cloud… 🌧️)

Using bigger instances when struggling does not necessarily improves performance :

Fair-share on EC2 burstable instances (T) permits to eventually have “more” than we are paying for, when collocating instances do not use it.

Moving to bigger instances with pinned vCPUs makes it strictly limited to this performance.

SMT (Simultanous Multi-Threading) is available on Intel-based instances, and AMD until 6th generation.

There’s no SMT on Graviton-based instances.

Higher performance → each vCPU is mapped to a physical core, not to a virtual thread.

IE : 50% performance increase between M6a (AMD with SMT) and M7a (AMD without SMT), as reaching 50% of CPU usage on M6a means starting again scheduling to the 1st vCPU / 2nd thread.

While SMT disabled instances are more expansive, they offer better performance, which avoids needs of scaling out early = less costs.

On big instances (ie : 32xl), memory is not flat :

- they have multiple CPU sockets, with each socket having its own memory slot bunch and MMU

- that’s also true for the PCIExpress cards (Nitro)

- lscpu command shows the sockets and SMT status + NUMA (mapping of cores to memory domains)

- A core on 1st CPU needing access to the memory domain or network needs to go through the other CPU, which adds some penalty

A few tricks :

- For Xen-based instances, use the TSC clock source. For Nitro instances running Linux, use the PTP clock exposed (Precision Time Protocol)

- Services using link-local address are limited to 1024 PPS, it includes DNS, NTP, IMDS (Instance Meta-Data Service)

- AWS aperf permits to collect profiling data and generate reports. Graviton instances offers better view on profiling metrics Interesting links :

– GitHub repo : AWS aperf

(Wednesday) GHJ301 – AWS GameDay : Developer experience (sponsored by New Relic)

(Gamified learning / no video available)

Challenge oriented into the following technologies and AWS services :

– Kubernetes / EKS

– Go / Python (Lambda)

– IAM

– SQS

(Wednesday) CMP320 – AWS Graviton : The best price performance for your AWS workloads

(Video is not available)

Why custom chips ?

- Specialization

- Speed

- Innovation

- Security

Graviton CPUs are built to deliver the best performance specifically for the AWS customer-types

Workloads, not to reach the higher score on a given benchmark

Graviton 3 added support of DDR5, with 50% bandwidth increase compared to DDR4 (used by 7th

Generation instances)

Graviton 4 : 50% more cores, 2x cache, 75% more memory bandwidth (used on 8th gen instances)

(Performance x4 compared to Graviton 1)

Graviton consume 60% less energy compared to equivalent third-party processors for the same compute power

Graviton 4 encrypts traffic with :

- DRAM

- Nitro cards

- Coherent link (between sockets)

(Thursday) KEY005 : Dr. Werner Vogels Keynote

“Plan for failures, and nothing will fail”

“Complexity can neither be created nor destroyed, just moved somewhere else”

Complexity at AWS is removed from customers, and managed internally.

Complexity != number of components : a bicycle as easier to ride than a monocycle

Lessons learned in SIMPLEXITY :

1) Make evolvability a requirement

(Software system ability to easily accommodate to future changes)

Do not ignore the warning signs (analogy with fogs put in heated water)

CloudWatch was initially “small”. It has evolved, has been rewritten multiple times, and splitted into a lot of smaller services all handling different functions, as it has become an “anti-pattern” (too big).

CloudWatch today : 665 Trillions entries every day / 440 PB ingested data (every day also)

2) Break complexity into pieces (including teams)

“Successful teams worry about not functioning well”

Give team “agency” : don’t tell them what to do, just give them problems with the associated importance and criticality.

3) Align organization with architecture

4) Organize into cells

Split services into cells. The cell should be big enough to handle the biggest workload you can imagine

But it should be small enough to be tested with a full-scale workload.

AWS : What is a cell-based architecture ?

5) Design predictable systems

Example 1 : Load-balancers get their config from S3 every second. It avoids spikes, backlogs and bottlenecks, and allows self-healing and is fully predictable.

Example 2 : Route53 is not directly impacted by health-check status changes. Health-checkers fleets pushes changes to aggregators, which pushes this data on a central in-memory table. Route53 checks this table only when (and each time) a DNS request is received.

6) Automate complexity

Example : > 1 Trillion DNS requests are analyzed for security every day. 124 000 malicious domains identified on a daily basis.

Automation makes complexity manageable.

Interesting links :

– What is a cell-based architecture?

(Thursday) SEC304 : Mitigate zero-day events and ransomware risks with VPC egress controls

(Workshop : no video available)

Guided lab, around VPC outbound DNS filtering, AWS firewall with Suricata rules, TLS decryption.

(Thursday) re:Play

Find some pictures at the end of this post.

Tons of fun, and 2 big shows with The Weezer, and Zedd 😍

(Friday) ARC326 : Chaos engineering : A proactive approach to system resilience

New designs we made are 99% biased by previous failures

Chaos engineering is a methodology to verify all assumptions made when building a new system.

Ie : Python requests timeout → ∞ by default. Same for mySQL connector.

“ Never assume. If you haven’t verified it, it’s probably broken “

Chaos engineering is a “compression algorithm for skills” :

Outages are what makes us senior engineers. Creating outages avoids forgetting high-level skills.

GameDays challenges are used internally at Amazon to train people on recovery.

→ GameDay : Creating Resiliency Through Destruction

The cost of downtime per hour :

> $300K for 90% of enterprises

Between $1M and $5M for 41% of enterprises

Companies with frequent outages faces cost up to 16x higher than those with less downtime.

AWS Fault Injection Service can be used to create failures and validate the expected behavior.

Demonstration with EKS architecture using ElastiCache and DynamoDB :

Assumption was “The request will go to the DB if the ElastiCache does not have the data or is down”

→ Added latency of 10 seconds to the ElastiCache connection

→ Assumption contradicted : no timeout configured on the Redis Connector

2nd assumption : the design provides resiliency in case of an availability zone failure

→ Simulated an AZ failure

→ Assumption contradicted : DynamoDB node was hard-coded on the app code

Experimentation loop :

- Define the steady state

- Identify the assumption

- Action !

- Analyze

- Investigate

- Repeat

Talk from BMW speaker. Lessons learned from chaos engineering : cross-team collaboration, leadership buy-in, psychological safety. Interesting links :

– GameDay : Creating Resiliency through Destruction (YouTube)

– AWS Fault Injection Service

(Friday) OPN402 – Gain expert-level knowledge about Powertools for AWS Lambda

PowerTools embeds core utilities for Python, TypeScript, Java and .NET

- Logging

- Metrics

- Tracer

- + many more

Logging with PowerTools allows using a custom formatter.

Also supports wide logs, which permits to generate a single log per invocation, adding keys to the pre-built log during the code execution steps.

Event Handling

How many functions do I need for my API ? It depends !

Single function (monolith pattern) :

- (+) Simplicity

- (+) Lower cold start chances

- (-) Higher cold start time (more imports)

- (-) Saling & quotas

- (-) Broad permissions

PowerTools offers routing / default routing / validation (pydantic) for monolith patterns.

+ OpenAPI spec schema and swagger automatically generated

Idempotency :

PowerTools can help to store previous calls on a third-party system and avoid returning a different output for an identical call.

- can use several external systems for persistence logging (DynamoDB, ElastiCache…)

- registers the Lambda context which permits to get the remaining time before execution context timeout (anticipating timeout : if the persistence layer does not ears back from the request, it means it has timed out and should be ran again if another call comes)

Roadmap : see links in presentation. Roadmap is really defined by customer demands : use Github issues or email.

Interesting links :

– GitHub repo : AWS PowerTools for Python

Advices for future re:Invent attendees

re:Invent is cool, you’ll meet tons of smart people, discover new products, learn about all the existing and new features of AWS, and will be invited to way more parties than you’ll able to attend.

However, it needs to be well prepared. I followed many advices I found on the Internet prior to planning my trip, and it really helped me have a good experience, while I met lots of attendees which were less prepared and had a terrible week.

Here are all the tips that I can only encourage you to follow if you want to take full advantage of re:Invent:

- Anticipate your trip : don’t book last time. First because you’ll pay full price for your flight, but also for your hotel. Las Vegas hosts 80k+ attendees for re:Invent. Also, if you plan soon enough (as I did), you can book your hotels through the re:Invent portal, which will allow you to stay at fancy places (I stayed at Bellagio), for half the normal price

- Anticipate your sessions scheduling : Almost all sessions (except keynotes and live retransmissions of technical breakouts on different locations) needs to be reserved. Reserved seatings booking opens around 1 month prior to the event : be on-line at the exact time it opens. Highly demanded sessions (like the ones I attended around Nitro system) were full in literally less than 2 minutes. The session catalog is available a few weeks prior to the seated bookings opening : mark all the sessions you are interested in as “favorites”, so that you’ll be able to find them quickly and just click on “Reserve a seat” as soon as the booking system opens.

- Don’t give up if you did not managed to book a seat for an interesting session : Even if you’ll be more comfortable having a well-planned schedule, you might still be able to attend a session that you found interesting but did not manage to reserve. Reserved seats are released 10 minutes prior to the beginning of the session (which means that people who booked the session but did not show up 10 minutes before the start time loose their seats, and people waiting in the “Walk-in” line will get those seats).

- Don’t be late at your reserved sessions : In line with the previous advice, if you don’t show up on the “Reserved” line at least 10 minutes prior to the beginning of the sessions you booked, your seat will be released and given to a “Walk-in” attendee. If it happens, you can still go to the “Walk-in” line, hoping their will be some remaining seats.

- Try to book your sessions on close locations : Las Vegas is (really, really) huge. Even if you always break the times announced by your guidance application for your walking trips, don’t think it will be the case there. You will easily have to walk more than 10 minutes just to exit an hotel and join the Las Vegas Boulevard. To avoid spending more time walking than attending conferences, try to plan your sessions on a given day as much as possible at the same location (take into consideration the keynotes location for that, if you want to attend them).

- Wear good (and comfortable) shoes : In any cases, you will walk a lot. I mean, A LOT. Believe me or not, while taking public transportations as much as possible (which are mainly free during the conference times for the full week if you wear your re:Invent attendee badge), I walked 87,4 km between Monday and Friday.

- Collect your badge and swag on Sunday : it will save you a lot of time on Monday morning, and will make you on-time for your first sessions. Note that there’s a badge collection desk directly at the LAS airport starting Sunday noon.

Some pictures !

One comment

Comments are closed.